Content Cleanup: How We Acquired 100k Users on Search by Updating Old Content

In the first quarter of 2021, we ran 7 experiments on content maintenance to measure the ROI of refreshing old SEO content. Here's everything we learned.

Kapwing is a web-based video editor committed to democratizing content creation with fast, collaborative, and highly accessible tools. Since our founding in 2018, our acquisition strategy has centered on organic Google Search. SEO is vital to the ways we identify user needs, target press opportunities, and design our landing pages. The library of “long-tail” Resources articles introduces creators to Kapwing and covers topics ranging from simple meme tutorials to web accessibility guidance to spotlights of creative experts.

In 2019, after graduating from college with an English degree, I joined Kapwing as the first Content Marketing hire to focus on the Resources Library. My manager – the cofounder/CEO – believed that writing original, in-depth tutorials on content creation topics would differentiate our video editing software from the competition and attract new creators looking for help on Google. I wrote over 200 articles in my first year at Kapwing while learning the basics of SEO, and by the end of 2019, millions of creators had discovered the editor through our Resources Library.

Kapwing’s Content Team designed most of our SEO processes in-house from first principles and experimentation. For example, we have created and refined a proprietary prioritization algorithm to rank new topic ideas by SEO opportunity. Until this year, however, we had struggled to form any strategies for content maintenance. In Q1, we ran 7 informal experiments on content maintenance to measure the ROI of refreshing old content.

In this article, I’ll share the plan we came up with at the beginning of the year, the content updates we performed, the results of our update experiments, and the impact this project had on our future content strategy.

Background

At Kapwing, we mostly write tutorials for creators using search for guidance on common editing tasks, but our topic range is broad. For example, I’ve recently written articles about recording Minecraft gameplay for beginners and creating channel trailers for YouTube. We take pride in producing high-quality content in-house that serves our target creator and the creator ecosystem with insights, news, and in-depth coverage.

We know, however, that our content gets stale over time. Technical instructions go out of date due to changes in our product, speech updates, and evolutions of the creator ecosystem. At the same time, the seasonal “tides” of Search and the delay of the Google index make it hard to map changes in content quality to changes in ranking. Still, because we believe that, as a general rule, the best and freshest content wins in Search, we felt that keeping evergreen content up to date was important for our community of creators and for our own growth.

But keeping every one of our articles up to date is an unrealistic, Herculean task. Our Resources library contains over 800 articles, dating back to September 2018. Maintaining all of our content would require an untenable effort, since new content is constantly being written. Instead, we needed a more refined strategy to identify opportunities and systematically update content when it’s worth the investment.

Our Hypothesis

We hypothesized that regularly updating old content could, in some cases, lead to outsized returns on acquiring new creators and improving our site’s domain authority. To validate this strategy, we needed a repeatable approach, a clear scope, and ROI analysis to see what types of updates had the greatest impact and could be implemented consistently in the future.

SEO experts like Neil Patel and the team at Moz have different ideas about the best ways to audit and maintain old content. Informed by their advice and our own hunches, I grouped candidate updates into experiments to learn which strategies bring the best results. Then, over 8 weeks, I carried out the content audits one by one. Thirty days after each update, I analyzed the results in Google Search Console and Google Analytics and compared the impact across experiments to recommend an action plan for content maintenance.

Our SEO Experiments

To start, I’ll define what we consider success. Before conducting the audit, we decided that a 10% improvement in the “key metric” would count as a successful experiment and that we would use the 10% threshold to inform our content maintenance going forward. My high-level goal for the whole project was to reach the 10% benchmark in 4 out of the 7 experiments, proving our hypothesis and giving us confidence in our future updates.

Method: To track results, we created a Google Sheet with tabs for each of the experimental groups. Before conducting the audits, I pulled recent data for that group of articles for the previous 30 days from Google Analytics or Search Console, depending on the data we needed to track. Then, 30 days after the updates were complete, I pulled the post-update data from the same source.
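The before-and-after comparison behind the 10% benchmark can be sketched in a few lines of Python. This is a minimal illustration, assuming each group's key metric is averaged over each 30-day window; the names `MetricWindow`, `relative_improvement`, and `is_success` are hypothetical, not part of any tool we used. One detail matters for SEO metrics: a lower average position or bounce rate is an improvement, so the sign has to flip for those.

```python
from dataclasses import dataclass

SUCCESS_THRESHOLD = 0.10  # a 10% improvement in the key metric counts as a win


@dataclass
class MetricWindow:
    """A group's key metric averaged over the 30 days before and after updating."""
    before: float
    after: float
    lower_is_better: bool = False  # True for average position and bounce rate


def relative_improvement(m: MetricWindow) -> float:
    """Fractional improvement; positive means the metric moved in the good direction."""
    if m.before == 0:
        raise ValueError("baseline is zero, so relative change is undefined")
    change = (m.after - m.before) / m.before
    # For position or bounce rate, a drop is an improvement, so flip the sign.
    return -change if m.lower_is_better else change


def is_success(m: MetricWindow, threshold: float = SUCCESS_THRESHOLD) -> bool:
    return relative_improvement(m) >= threshold


if __name__ == "__main__":
    ctr = MetricWindow(before=0.62, after=0.96)  # Low CTR group, in percent
    print(f"{relative_improvement(ctr):.1%}", is_success(ctr))
```

This compares group averages. Averaging each article's own percentage change instead can produce a noticeably different figure, and it breaks down for articles whose baseline is zero.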

Of course, this experiment process was informal rather than rigorous. There was some overlap between the experimental groups (although we tried to minimize this by manually removing double-counted articles). I did not do an A/B test with a control group, but instead looked at the 30 days before and after the audit. As a result, factors like seasonal traffic fluctuations skewed the results, so I focused on more stable outcomes like average search rank and click-through rate. But my goal was to gain practical guidance rather than attain scientific accuracy, so I wanted to share my findings here for other content marketers in the same position.

After initial research I came up with a list of 7 different ways to identify articles that could benefit most from updates, with minimal overlap and identifiable metrics for improvement:

1. Low Click-Through Rate: Articles with a CTR under 1% that rank in the top 30.

Problem: People see these articles in the Google Search results, but don’t click to our website.

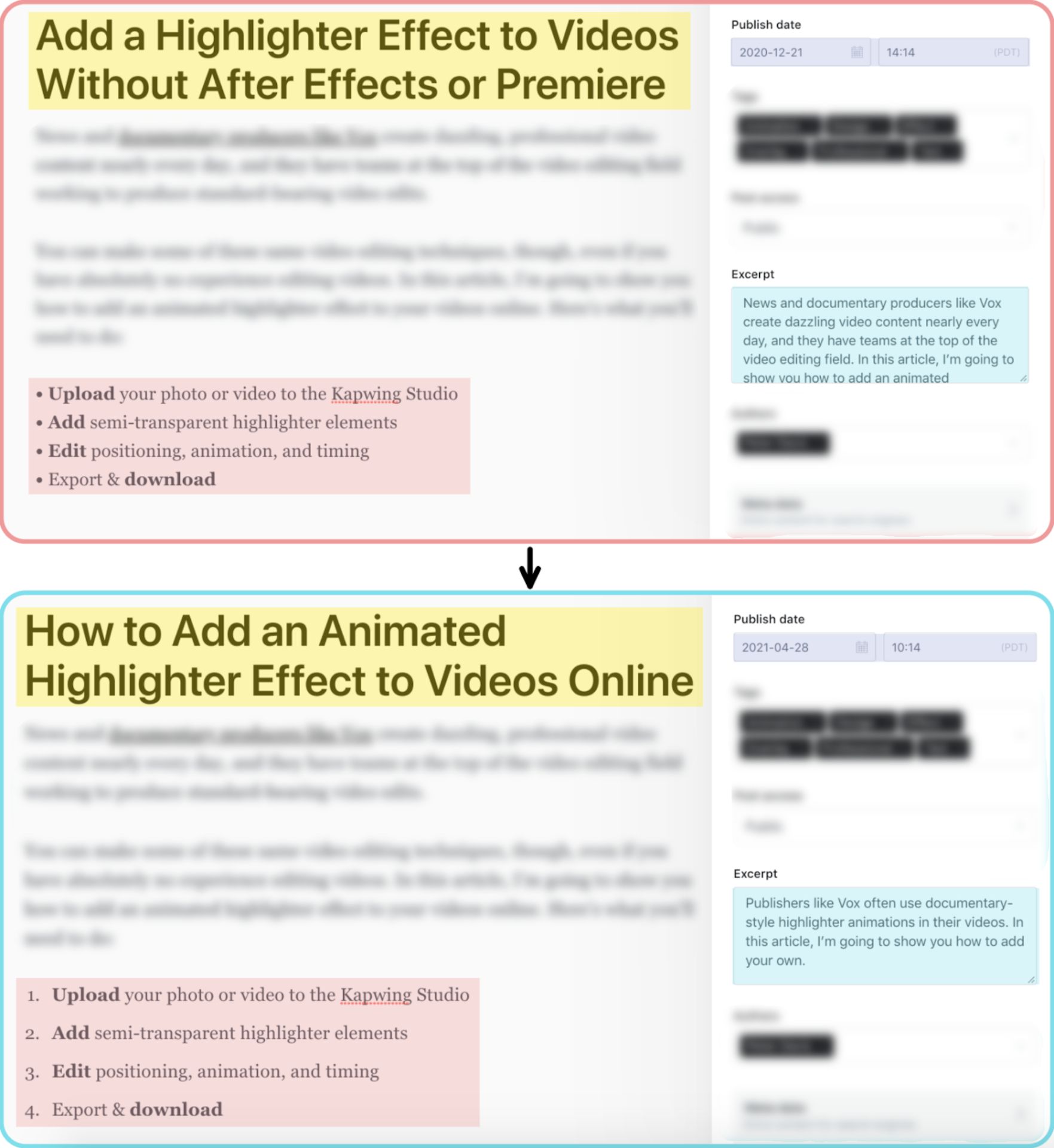

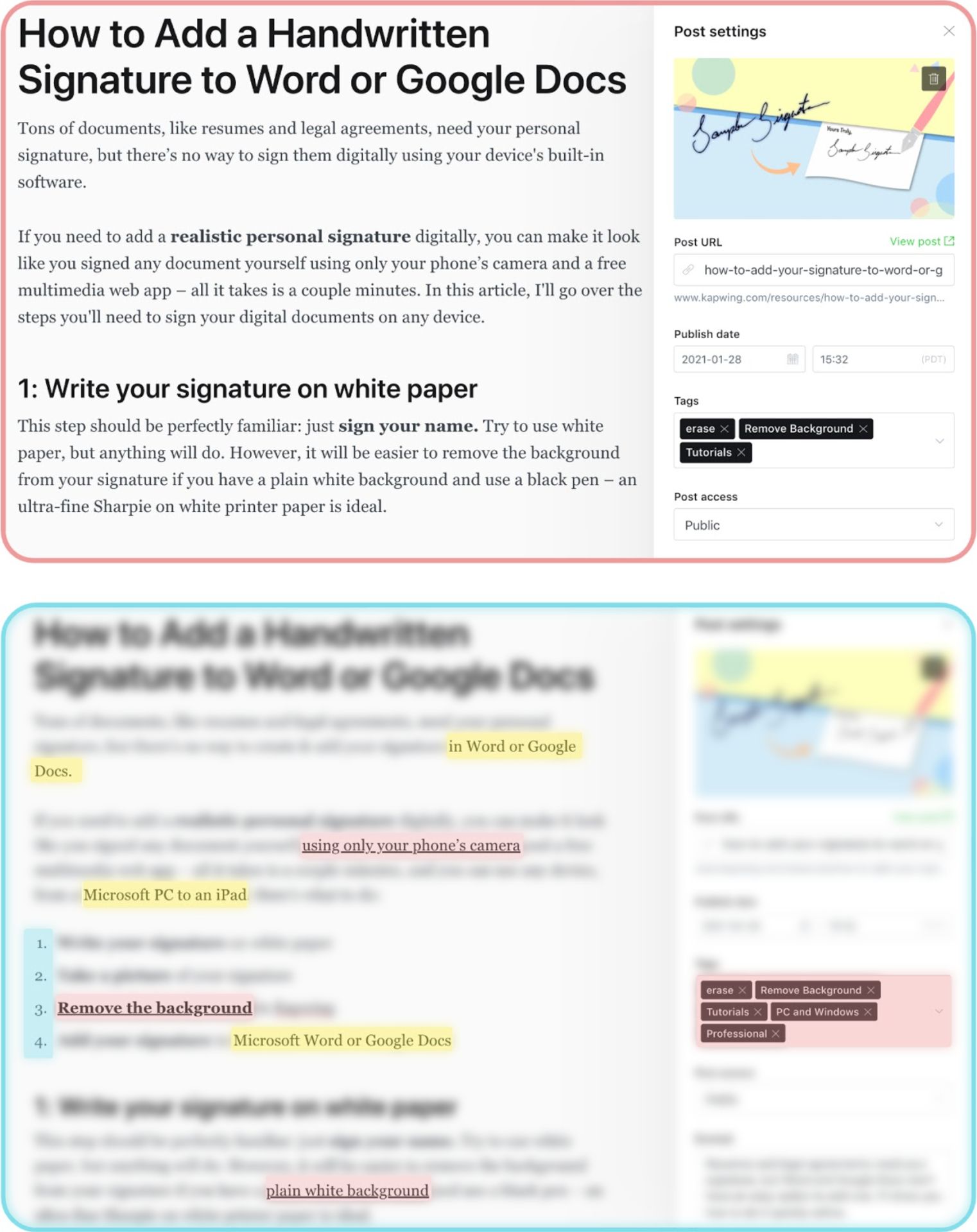

Updates: Change the title, excerpt, and meta tags to refine what the searcher sees in Google results. Refresh references and links. Add structured content like numbered lists to improve rank and make the article eligible for Google’s featured snippets. Update the publication date when more than 100 words of edits are made.
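The 100-word rule for updating the publication date can be checked mechanically. The sketch below assumes you have the old and new article bodies as plain text and counts inserted, deleted, and replaced words with Python's standard `difflib`. The function names are hypothetical, and the strict `>` comparison follows the "more than 100 words" wording here.

```python
import difflib

WORD_THRESHOLD = 100  # edits beyond this many words earn a new publication date


def changed_word_count(old_text: str, new_text: str) -> int:
    """Count words inserted, deleted, or replaced between two versions of an article."""
    old_words, new_words = old_text.split(), new_text.split()
    matcher = difflib.SequenceMatcher(a=old_words, b=new_words, autojunk=False)
    changed = 0
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":
            # For a replacement, count the larger side so a rewrite isn't counted twice.
            changed += max(i2 - i1, j2 - j1)
    return changed


def should_update_pub_date(old_text: str, new_text: str,
                           threshold: int = WORD_THRESHOLD) -> bool:
    return changed_word_count(old_text, new_text) > threshold
```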

Key metric: Click-through rate.

Data scrape: I exported the data from Search Console and removed all rows with an average search position worse than 30 (that is, outside the top 30). Then, I identified the remaining pages with a click-through rate (CTR) under 1%.
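A sketch of this filter in Python, assuming the Search Console performance report was exported to CSV with `Page`, `CTR`, and `Position` columns and CTR formatted as a percentage string such as `0.62%`. Those column names are assumptions; check the headers in your own export.

```python
import csv
import io


def parse_percent(value: str) -> float:
    """Turn a percentage string such as '0.62%' into a fraction (0.0062)."""
    return float(value.strip().rstrip("%")) / 100


def low_ctr_candidates(csv_text: str, max_position: float = 30.0,
                       max_ctr: float = 0.01) -> list[str]:
    """Pages ranking in the top 30 on average but earning a CTR under 1%."""
    picks = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        position = float(row["Position"])
        ctr = parse_percent(row["CTR"])
        # Smaller position numbers are better, so "top 30" means position <= 30.
        if position <= max_position and ctr < max_ctr:
            picks.append(row["Page"])
    return picks


sample = """Page,Clicks,Impressions,CTR,Position
/resources/a,5,1000,0.5%,12.3
/resources/b,50,1000,5%,8
/resources/c,1,500,0.2%,45
"""
print(low_ctr_candidates(sample))
```

In the made-up sample, only `/resources/a` qualifies: `/resources/b` already has a healthy CTR, and `/resources/c` sits outside the top 30.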

2. Previously Strong: Pages whose clicks dropped by more than 30% in a month but that still received at least 30 clicks.

Problem: We know these articles performed well in the past, so we can update them to better serve the present. The severe dropoff indicates that information or products have changed, or other relevant pages have begun to rank higher.

Updates: New technical information, up-to-date contextual linking and screenshots, and new relevant keywords (for example, adding the words “Instagram Reels” since the new platform launched). If I changed at least 100 words of content, I updated the publication date.

Key metric: Average position

Data scrape: I exported data from Search Console, filtered it by clicks, and ranked pages by their percentage reduction in clicks.
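The month-over-month comparison might look like the sketch below. It assumes two click exports (previous and current month) already loaded into dictionaries keyed by page. It also reads "still receiving at least 30 clicks" as applying to the current month, which is an interpretation rather than something stated above. Results are ordered by the size of the drop, mirroring the ranking step.

```python
def previously_strong(prev_clicks: dict[str, int], curr_clicks: dict[str, int],
                      min_drop: float = 0.30, min_clicks: int = 30) -> list[str]:
    """Pages whose clicks fell by more than 30% but still got at least 30 clicks."""
    drops = {}
    for page, before in prev_clicks.items():
        after = curr_clicks.get(page, 0)  # a page absent this month had zero clicks
        if before == 0:
            continue  # no baseline to compare against
        drop = (before - after) / before
        if drop > min_drop and after >= min_clicks:
            drops[page] = drop
    # Largest percentage drop first.
    return sorted(drops, key=drops.get, reverse=True)
```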

3. High Traffic, Low Conversion: Content that received more than 1000 visitors in a month, but converted under 3% of visitors to use our product.

Problem: For these articles, Google was sending us traffic, but the content wasn’t effectively engaging users.

Updates: Make sure the beginning of the article matches the searcher’s intent. Improve the call to action, make the intro clearer, and make sure images are up to date. Often, this meant moving calls to action earlier in the article and making them stand out clearly from the text.

Key metric: Conversion rate (how many people who read this article clicked through to use our editing tools).

Data scrape: Pulled URLs from Google Analytics and identified rows that had <3% goal completion rate and more than 1000 monthly visitors. Kapwing set up a “goal” in Google Analytics for users who begin creating a video in our editor.
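In Python, the same selection might look like this sketch. It assumes per-article monthly sessions and goal completions pulled from Google Analytics, and it computes the conversion rate as goal completions divided by sessions, similar to how Analytics reports a goal conversion rate. `PageStats` is a hypothetical container.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    page: str
    sessions: int          # monthly sessions on the article
    goal_completions: int  # sessions that went on to start a video in the editor

    @property
    def conversion_rate(self) -> float:
        return self.goal_completions / self.sessions if self.sessions else 0.0


def high_traffic_low_conversion(rows: list[PageStats], min_sessions: int = 1000,
                                max_rate: float = 0.03) -> list[str]:
    """Articles with more than 1,000 monthly sessions converting under 3%."""
    return [r.page for r in rows
            if r.sessions > min_sessions and r.conversion_rate < max_rate]
```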

4. High Conversion, Low Rank: Pages that convert over 25% of visitors but have an average search position worse than 10.

Problem: Although most potential users don’t see these articles on the first page of Search, the people who do read them find them useful and informative.

Updates: Enhancements to improve Search rank: keyword richness, numbered lists, cross-linking, alt text, and updated publication dates.

Key metric: Average rank position.

Data scrape: Pulled URLs from Google Analytics on articles that ranked outside of the top 10, but converted over 25% of users to use our tools.
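This selection joins two sources: conversion rates from Google Analytics and average positions from Search Console. Here is a sketch, assuming both are already loaded into dictionaries. Analytics typically reports page paths while Search Console reports full URLs, so the hypothetical `to_path` helper normalizes both to the same form before matching.

```python
from urllib.parse import urlsplit


def to_path(url: str) -> str:
    """Reduce a full URL or a page path to a bare path without a trailing slash."""
    path = urlsplit(url).path
    return path.rstrip("/") or "/"


def high_conversion_low_rank(conversion: dict[str, float], position: dict[str, float],
                             min_rate: float = 0.25,
                             min_position: float = 10.0) -> list[str]:
    """Pages converting over 25% of visitors while averaging worse than position 10."""
    positions = {to_path(url): pos for url, pos in position.items()}
    picks = []
    for page, rate in conversion.items():
        pos = positions.get(to_path(page))
        if pos is None:
            continue  # no Search Console data for this page
        if rate > min_rate and pos > min_position:
            picks.append(page)
    return picks
```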

5. High Bounce Rate: Articles with over 500 sessions and a bounce rate over 80%.

Problem: These pages attract clicks from Search, but don’t convert those clicks into more engaged user sessions.

Updates: To avoid bounced sessions, I focused on creating effective calls to action, while also adding valuable contextual links and improving overall article structure, images, and clarity to keep users engaged in the article. These updates had the largest scope of all experiments, involving in-depth edits to nearly the entire article.

Key metric: Bounce rate, measured through Google Analytics. A session bounces when a user leaves our domain without visiting any other pages.

Data scrape: Articles that had a bounce rate over 80% and more than 500 unique sessions in a month, according to Google Analytics data.
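Using the definition above (a session that views only one page), bounce rate per landing page can be computed from raw session records. This is a minimal sketch, assuming each record is a `(landing_page, pageviews)` pair. Google Analytics calculates bounce rate for you, and its calculation can also be affected by interaction events, so figures from this sketch may differ slightly.

```python
from collections import Counter


def bounce_rates(sessions: list[tuple[str, int]]) -> dict[str, tuple[int, float]]:
    """Map each landing page to (session count, bounce rate).

    A session bounces when it views only one page before leaving the site.
    """
    totals = Counter()
    bounces = Counter()
    for landing_page, pageviews in sessions:
        totals[landing_page] += 1
        if pageviews <= 1:
            bounces[landing_page] += 1
    return {page: (n, bounces[page] / n) for page, n in totals.items()}


def high_bounce_pages(sessions: list[tuple[str, int]], min_sessions: int = 500,
                      min_bounce: float = 0.80) -> list[str]:
    """Articles with more than 500 sessions and a bounce rate over 80%."""
    return [page for page, (n, rate) in bounce_rates(sessions).items()
            if n > min_sessions and rate > min_bounce]
```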

6. Articles that contain broken links

Problem: A page’s SEO is hurt when Google can’t follow all its links to determine how helpful or authoritative they are.

Updates: I used the SEMrush Site Audit crawler to identify every time a link in our Resources Library couldn’t be followed, either 404’ing or redirecting too many times. Then I either fixed typos, changed the destination link, or redirected a defunct URL to an updated link.

Key metric: Average search rank.

Data scrape: Identified pages with Internal Link errors in the SEMrush Site Audit tool.
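Once a crawl is done, flagging the problem links is a simple classification. The sketch below does not read any SEMrush export format. It assumes crawl results have already been converted into hypothetical `CrawledLink` records holding the final HTTP status and the number of redirect hops. The cutoff of 3 hops is an arbitrary assumption standing in for "too many" redirects.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional

MAX_REDIRECT_HOPS = 3  # hypothetical cutoff for "redirecting too many times"


@dataclass
class CrawledLink:
    source_page: str    # article containing the link
    target_url: str     # where the link points
    final_status: int   # HTTP status after following redirects
    redirect_hops: int  # number of redirects followed


def link_problem(link: CrawledLink, max_hops: int = MAX_REDIRECT_HOPS) -> Optional[str]:
    """Describe what is wrong with a link, or return None if it resolves cleanly."""
    if link.final_status >= 400:
        return f"HTTP {link.final_status}"
    if link.redirect_hops > max_hops:
        return f"{link.redirect_hops} redirects"
    return None


def pages_with_broken_links(links: list[CrawledLink]) -> dict[str, list[tuple[str, str]]]:
    """Group problem links by the article that needs fixing."""
    report = defaultdict(list)
    for link in links:
        problem = link_problem(link)
        if problem is not None:
            report[link.source_page].append((link.target_url, problem))
    return dict(report)
```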

7. Outdated Content: Articles from 2018 that reflect our current product focus but lack up-to-date information.

Problem: These articles are still relevant to our current needs, but they need updates to be useful for readers.

Updates: New contextual links, better formatting for our new CMS, new graphic assets of our tools, and updated tutorials with current Kapwing workflows.

Key metric: Average search rank.

Data scrape: Manual.

The Winners

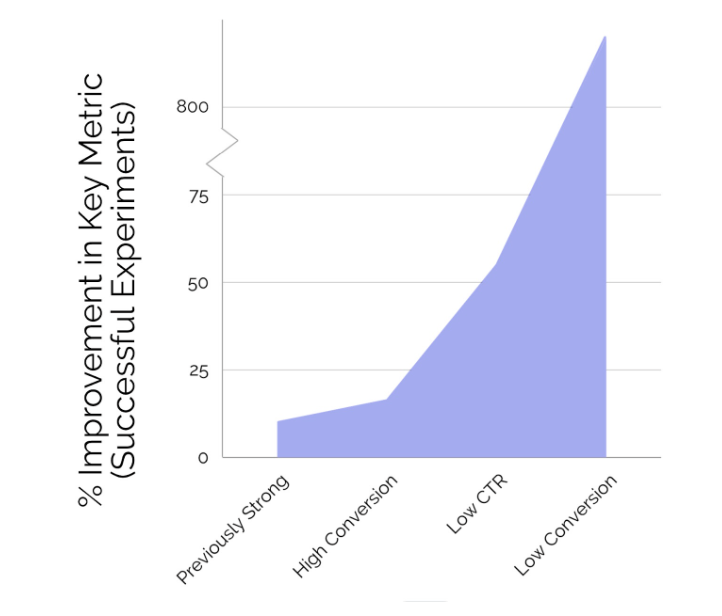

In the end, 4/7 experiments showed more than 10% improvement:

- Low CTR

- Previously Strong

- High Traffic, Low Conversion

- High Conversion, Low Rank

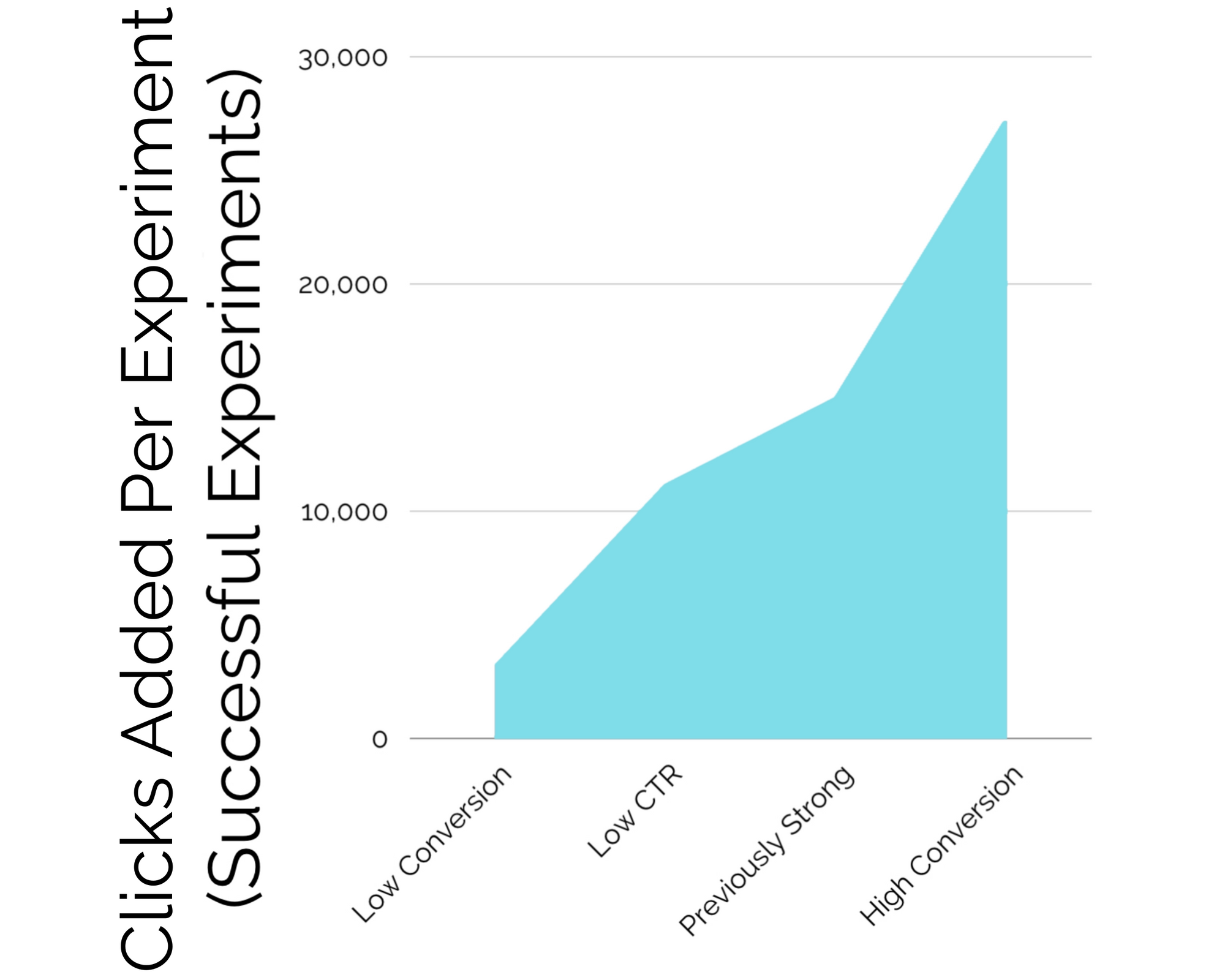

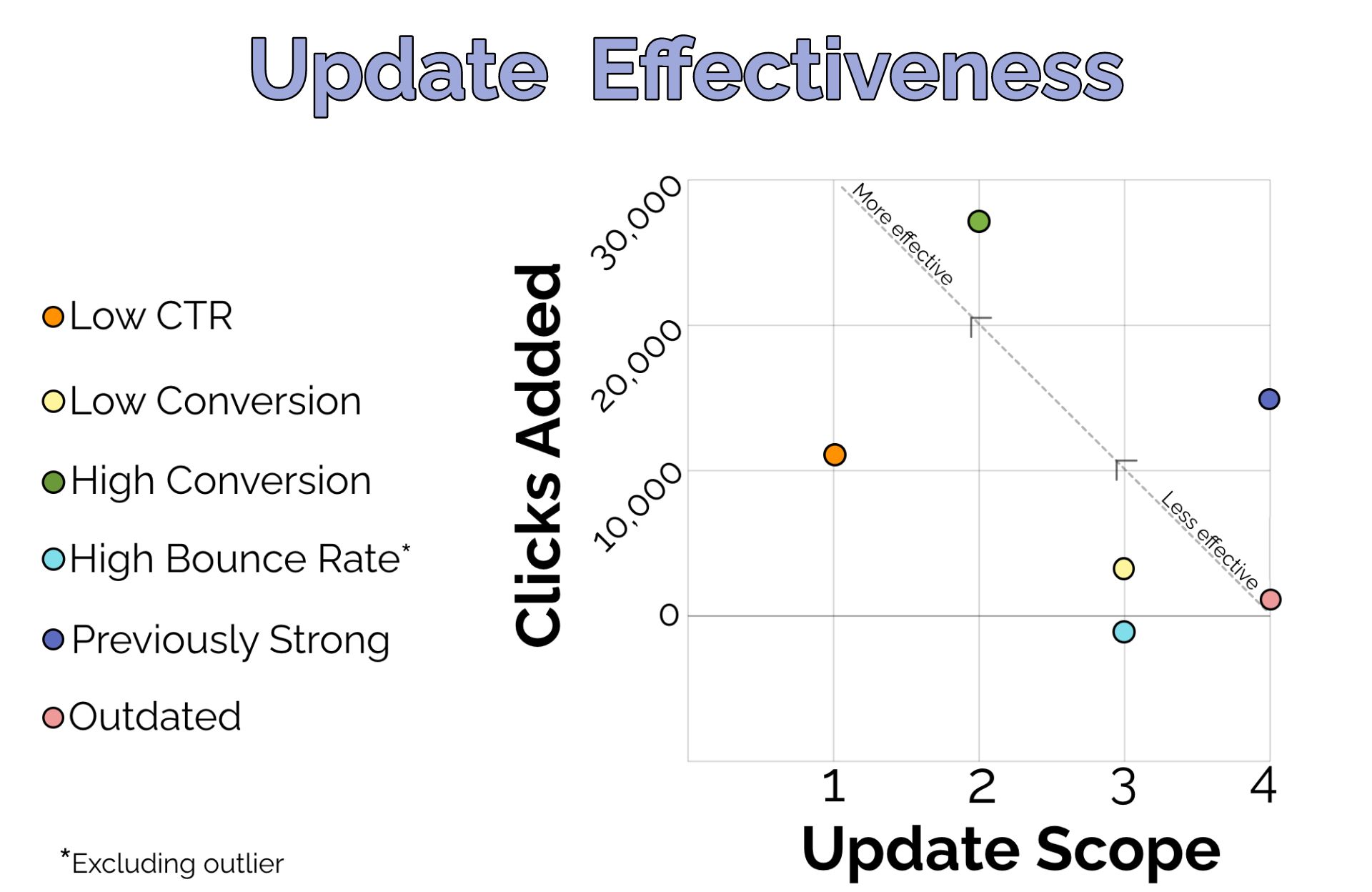

Experiment 4 – High Conversion, Low Rank – made the greatest overall impact. Our content audit generated nearly 850 new clicks per article updated per week. This was by far the highest figure among all update groups. Plus, since these articles successfully convert readers to begin using our product, each click in this batch brought an especially high value to Kapwing. The key metric in this update, average search rank, improved by 16.4%, from 27.5 to 21.5.

Experiment 3 – High Traffic, Low Conversion – resulted in by far the largest improvement in its key metric of conversion rate (readers who went on to use Kapwing). However, since the articles selected had exceptionally low conversion values (many started at 0%), gains were comparatively easy to accomplish. The conversion rate of these articles ballooned from an average of 0.65% to 6.04%, an increase of 829%. Since we usually measure an article’s overall impact on our business by the number of users it converts, this update had a significantly lower overall impact than the other winners, as 6.04% is roughly half of the average for our articles.

Experiment 2 – Previously Strong Content – barely passed the bar for experiment success, improving search rank by an average of 10.2%. However, this modest improvement in search rank paid off in traffic, as average clicks per article more than doubled. In the end, this made roughly half as much impact as Experiment 4.

Experiment 1 – Low Click-Through Rate – improved articles’ CTR from an average of 0.62% to an average of 0.96% (+55%). Since these updates were relatively quick (copy changes to the title, excerpt, metadata, and general formatting), this experiment had an especially high ROI. Overall, average rank improved by 13% for these articles, and we got about 1/3 as many new clicks as in the most successful experiment.

The Losers

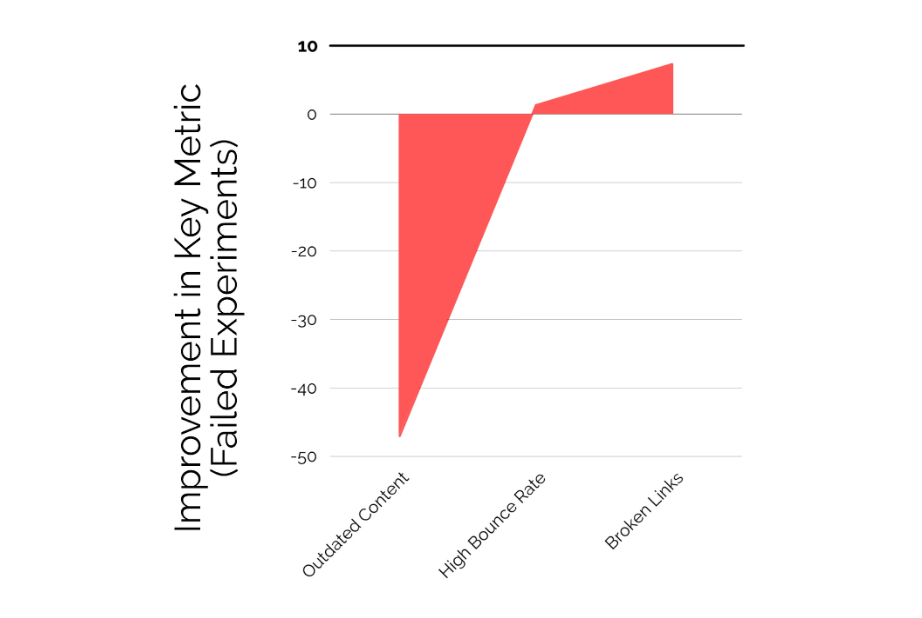

Experiments 5, 6, and 7 failed to move the needle significantly:

- High Bounce Rate

- Broken Links

- Outdated Content updates

Experiment 5 – High Bounce Rate – only moved bounce rate slightly, by about 1%. Overall, it did next to nothing to improve these pages’ SEO, and the experiment fell well short of 10% improvement.

Experiment 6 – Broken Links – took very little work compared to the other updates, and it made a very small impact as well. In the end, we saw a 7.3% improvement in rank across all articles in this group: promising, but simply not enough to meet our benchmark for success.

Experiment 7 – Outdated Content – showed the least promise of all our experiments. Since this grouping didn’t actually group articles by specific or measurable deficiencies, it was unclear exactly what changes needed to be made, why improvement was needed, or how to apply the lessons of this experiment to future updates. In the end, this experiment added nearly 1000 new clicks in total, but the average search position of the updated articles actually worsened by over 40% (though a single outlier heavily influenced this figure).

What We Learned

In the end, we decided to make a regular practice of executing 4 of the 7 update types – but not the exact 4 that met our bar for success.

Since the High Traffic, Low Conversion updates didn’t significantly affect much other than conversion rate, and required a large amount of work per update, their out-of-the-park key-metric gains didn’t add nearly as much value as the High Conversion, Low Rank; Low Click-Through Rate; and Previously Strong updates.

So, in the end, we used these three most effective update strategies, plus a fourth: broken link fixes. There was no definitive way to gauge the success of fixing broken links beyond average search rank, but what we know about SEO tells us that it’s a good idea to make these updates a habit for the overall health of our domain.

All together, our plan going forward is to commit about a week of work to these updates, split among four or five people, twice per year. Most of our focus needs to remain on creating new content, improving our content strategy, and honing our brand identity, so we want to carry out content maintenance as efficiently as possible. Each of these experiments took me about a week without any prior experience or guidance, so we expect that a team of four or five can complete them in just three or four days.

Ultimately, there’s no certainty that what works for our site’s SEO content maintenance will work for yours, or anyone else’s. I hope our experience gives you a starting point to explore your own content maintenance practices. It gave us a sizable SEO boost, and with the right combination of open experimentation and quantitative measurement, it can work for your SEO strategy, too.