Deepfake Videos Have Gone Mainstream—Now What?

High-quality deepfake videos are more popular than ever.

Not everyone realizes how simple they can be to make. In this post, we hope to educate readers about the state of deepfake videos today: how easy it is to make one, how prevalent they are throughout social media, and how convincing they can be thanks to the latest developments in AI.

An example of an educational AI persona that can be generated for free, in just a few clicks

This discussion about deepfake videos stems from firsthand experience. While developing a recent feature called AI Personas within Kapwing, we understood that many of the new AI projects could also be used for nefarious purposes. As part of our work, we feel obligated to share our research and experience with a wider audience. Our goal is to share what’s possible and what to look out for so that people can remain alert to abuse, impersonation, and fake news, especially with an election season coming up in the US.

Creating a high quality video deepfake is easier than you think

Many smart people on the internet seem to believe that realistic video deepfakes are still relatively difficult to make. Sam Gregory, the executive director of the human rights non-profit WITNESS, recently told Salon that "full-face deepfakes in real-life scenes remain challenging to do really well."

Some credible sources overstate the amount of expertise and training media that deepfake makers need to create convincing videos. For example, a 2024 article by Sara Jodka states, “Deepfakes are created using a significant amount of data, including photos, soundbites, or videos of a target person.”

Both of these statements are outdated.

On Kapwing, you can make a deepfake in just a few clicks. We made this tool available for educational purposes.

Deepfakes were originally created using GANs trained on large amounts of data, so creating a high quality deepfake video used to be accessible only to developers able to run scripts on specialized hardware. However, new tech has made it possible to create deepfakes "zero shot," a technical phrase meaning that the model needs no additional training on the person being imitated. In practice, a realistic video can be made from just 10 seconds of footage, within minutes, without any additional data.

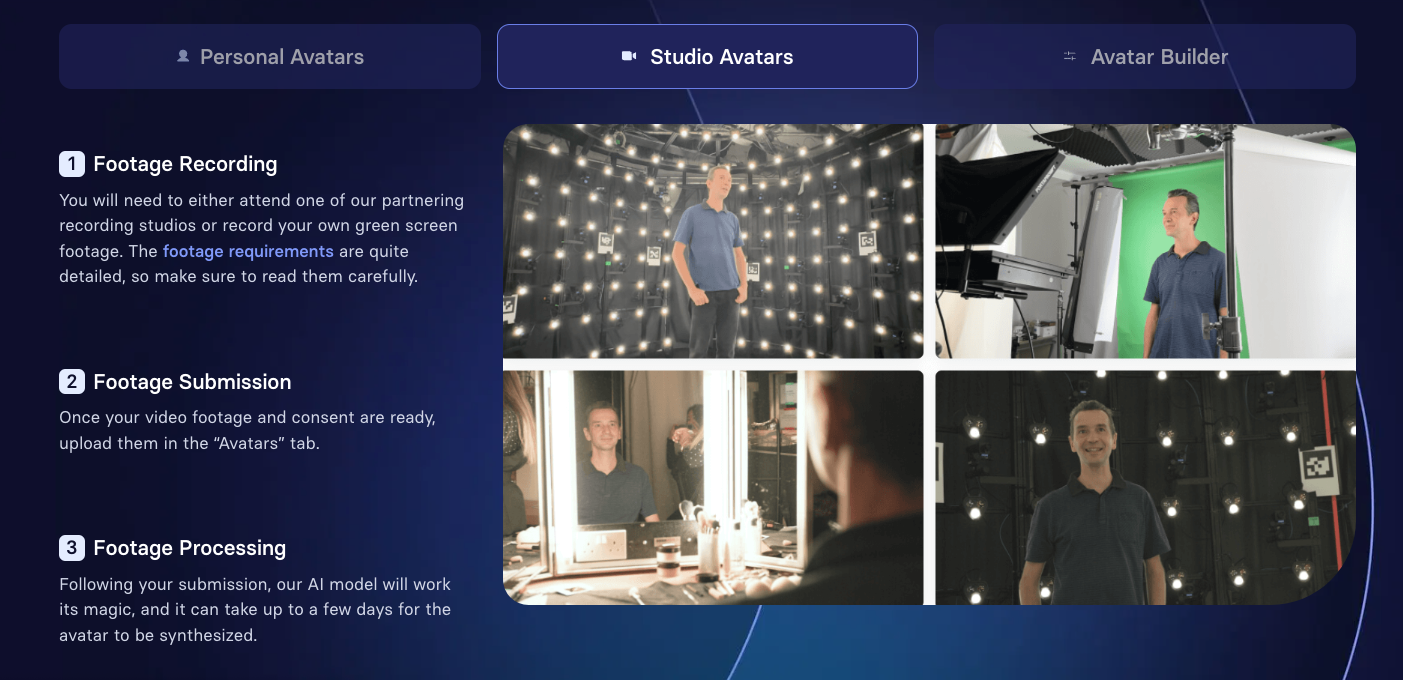

Even popular companies that market a custom AI avatar service, such as Synthesia or HeyGen, require at least 2 minutes of consistent video footage, where the subject is stationary, reading a specific script, while making very limited gestures and other movements. In addition, the avatar creation process can take a significant amount of time - for example, Synthesia claims, “A personal avatar takes 1 business day to create once the footage is submitted.”

New developments in open-source voice cloning and lip syncing technologies, however, make creating a realistic deepfake much faster. An AI twin can be created with just 10 seconds of initial footage and produce an output in minutes, not days. They also allow creators to create deepfakes with any type of footage, even if it includes movement or gestures.

To illustrate how easy it can be for anyone to use this technology, we created an educational deepfake video maker on Kapwing. This tool can be used to generate highly realistic deepfakes in a few clicks for free - but for educational purposes only. For safety reasons, a non-removable watermark will be added to all educational outputs to clarify that they are AI-generated.

Our hope for launching this in Kapwing is to showcase what’s possible with the latest developments in AI, and educate creators on how easy it might be for someone to use these technologies. These videos can be made without significant data, training, or time, and for free, and the tools are based on open source technology. That tech exists whether it’s in Kapwing or not.

Deepfakes are more prevalent than you think

Deepfakes are no longer a technology limited to a dark corner of the internet. Video deepfakes have gone mainstream - they are embraced on social media, and are almost never labeled as clearly AI-generated. While many of the most viral ones are presented as satire, there are plenty of unsuspecting commenters who are fooled as well.

One video deepfake that recently went viral depicts a fake speech by Joe Biden after he dropped out of the presidential race:

🚨Breaking Now🚨: President Joe Biden makes his first video address following dropping out of the Presidential race. pic.twitter.com/oIE69FHXdf

— Prison Mitch (@MidnightMitch) July 21, 2024

Other popular posts on X include a fake interview segment of Kevin Durant, congratulating the creator on reaching a certain number of followers:

No way Kevin Durant congratulated me on hitting 100K.. Thank you @KDTrey5 🫡🔥 pic.twitter.com/yN4pfyv0oS

— NBACentel (@TheNBACentel) August 25, 2024

Like satirical synthetic media of the past (such as the Baka Mitai meme and Obama mashups), this content format has the ingredients to go viral. The videos are believable.

Improvements to two key technologies in recent weeks have combined to make modern deepfakes much easier to make:

- Voice cloning works by taking someone’s voice from a small sample of audio (usually only 10-15 seconds) and applying the vocal characteristics to an underlying model that can generate speech from any text. The generated audio file is then combined with a real video of someone using lip sync.

- Lip sync AI works by matching a person’s lips to the sounds made in an audio file, and adjusting the mouth to match what’s being said. A big part of this process is also centered around the visual quality of the lips, and making them match the speaker’s face.

As part of our research, our team sought to find out the prevalence of deepfakes amongst creators and which popular figures were targeted. Is this format more popular for celebrities, influencers, or politicians?

Donald Trump, Elon Musk and Taylor Swift are the most deepfaked celebrities in 2024

Even when created as a joke or art piece, deepfaking raises social and ethical concerns for celebrity and non-celebrity targets. “It feels like a violation,” as UK Channel 4 newsreader and deepfake victim Cathy Newman puts it.

We set out to identify the influential public figures most commonly targeted by deepfakes in 2024 by analyzing the number of text-to-video prompts on a popular AI video Discord channel for 500 influential public figures in American culture. Research shows that most deepfakes are made to “influence public opinion, enable scam or fraudulent activities, or to generate profit," so we think it's important that consumers know what to look out for.
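For readers curious how a tally like this could be produced, here is a minimal sketch in Python. It is not the pipeline used for this study. The prompts and the name list are hypothetical sample data, and the matching rule (case-insensitive, whole-name match, counted once per prompt) is an assumption made for illustration.

```python
import re
from collections import Counter


def count_name_mentions(prompts, names):
    """Count how many prompts mention each name at least once.

    Matching is case-insensitive and anchored on word boundaries, so a
    name only counts when it appears as a whole phrase.
    """
    patterns = {
        name: re.compile(r"\b" + re.escape(name) + r"\b", re.IGNORECASE)
        for name in names
    }
    counts = Counter()
    for prompt in prompts:
        for name, pattern in patterns.items():
            if pattern.search(prompt):
                counts[name] += 1
    return counts


# Hypothetical sample data, for illustration only.
sample_prompts = [
    "Donald Trump dancing in the rain",
    "elon musk riding a rocket",
    "Taylor Swift and Donald Trump shaking hands",
    "a cat playing piano",
]
sample_names = ["Donald Trump", "Elon Musk", "Taylor Swift"]

# most_common() sorts names from most to least prompted.
ranking = count_name_mentions(sample_prompts, sample_names).most_common()
print(ranking)
```

A real analysis would also need to handle nicknames, misspellings, and people who share a name, which this sketch ignores.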

Click the colored tabs in the table below to reveal the most prompted deepfake celebrities in each category.

The three most deepfaked celebrities show how interconnected the strands of top-tier celebrity culture in America truly are. In January, explicit deepfakes of Taylor Swift on Elon Musk’s X platform received 45 million views and 24,000 reposts in just 17 hours. Just a few months later, Donald Trump — the most deepfaked celebrity of all, with 12,384 AI video prompts in 2024 — shared images falsely showing Swift to be a Trump voter, leading to accusations of misinformation.

Along with fourth-placed President Biden, Trump, Musk and Swift have been deepfaked significantly more often than anyone else. The highest profile Biden deepfakes occurred when political consultant Steve Kramer, apparently with the help of a nomadic street magician called Paul Carpenter, cloned the president’s voice for a wave of robocalls to local voters ahead of the New Hampshire primaries.

Biden has been deepfaked 3.45 times as often as fifth-placed Tom Cruise. As the archetypal charismatic action film star, Cruise is an obvious starting place for both casual AI hobbyists and fraudsters wishing to exploit the public’s trust. Cruise was also the target of an infamous viral deepfake, for which Cruise look-alike Miles Fisher casually transplanted faces with the Mission Impossible star, gaining four million TikTok views in two days. More recently — and more seriously — a Kremlin-affiliated group deepfaked Cruise in a video intended to tarnish the reputation of the Paris Olympics.

“The video, which falsely purported to be a Netflix documentary narrated by the familiar voice of American actor Tom Cruise, clearly signaled the content’s creators committed considerable time to the project and demonstrated more skill than most influence campaigns we observe,” reported Microsoft’s Threat Analysis Center (MTAC).

How you can detect modern deepfakes

Detecting modern deepfakes involves paying close attention to both the audio and the video - specifically the voice and the lips. Below we share our strategies to help you tell the difference between real and fake:

1. Inconsistent movement of lips and teeth

Watch Katherine's mouth closely to see the limits of the AI's ability to make the mouth and teeth move naturally.

Lip syncing technology works by first downscaling the mouth area, matching lip movement to the audio, then upscaling the lips back to match the speaker’s face. While different algorithms use different approaches, the general process often results in imperfections that become easier to detect if you know what to look for.

Modern deepfakes can sometimes have a blurrier mouth area, or have unnaturally pink lips and white teeth. Teeth are rather difficult for AI to create well, and you can often distinguish real videos from fake ones by the movement and consistency of the teeth when they are visible.
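The blurry-mouth clue can be turned into a rough numeric check. The sketch below is one possible heuristic, not a production detector: it compares image sharpness (measured as the variance of a simple Laplacian filter) inside the mouth area against the part of the face above it. The function names, the `mouth_box` coordinates, and the synthetic test image are all assumptions for illustration. In practice the face crop and mouth box would come from a face landmark detector, which is not shown here.

```python
import numpy as np


def laplacian_variance(gray):
    """Variance of a 4-neighbor Laplacian; higher values mean sharper detail."""
    g = np.asarray(gray, dtype=np.float64)
    lap = (
        g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
        - 4.0 * g[1:-1, 1:-1]
    )
    return float(lap.var())


def mouth_sharpness_ratio(face_gray, mouth_box):
    """Sharpness of the mouth region divided by sharpness of the face above it.

    mouth_box is (top, bottom, left, right) in pixel coordinates of the
    grayscale face crop. A ratio well below 1 means the mouth is blurrier
    than the rest of the face.
    """
    top, bottom, left, right = mouth_box
    mouth = face_gray[top:bottom, left:right]
    reference = face_gray[:top, :]
    reference_sharpness = laplacian_variance(reference)
    if reference_sharpness == 0.0:
        raise ValueError("reference region has no detail to compare against")
    return laplacian_variance(mouth) / reference_sharpness


# Synthetic demo: a noisy "face" whose mouth area is downscaled by 4x and
# upscaled again, loosely imitating the lip sync process described above.
rng = np.random.default_rng(0)
real_face = rng.uniform(0.0, 255.0, size=(128, 128))
box = (80, 112, 32, 96)  # hypothetical mouth location

fake_face = real_face.copy()
mouth = fake_face[80:112, 32:96]
small = mouth.reshape(8, 4, 16, 4).mean(axis=(1, 3))  # 4x downscale
fake_face[80:112, 32:96] = np.repeat(np.repeat(small, 4, axis=0), 4, axis=1)

real_ratio = mouth_sharpness_ratio(real_face, box)
fake_ratio = mouth_sharpness_ratio(fake_face, box)
print(f"real: {real_ratio:.2f}, altered: {fake_ratio:.2f}")
```

A low ratio is only a hint. Video compression, motion blur, or a hand in front of the mouth can lower it in genuine footage too, so a check like this is best used alongside the other signs in this list.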

2. Unnatural body movements and gestures

In this video, you can tell that the gestures and body movements don't quite match up to what's being said. This is an important detail that is hard to change with deepfakes.

While lips and mouth movements can be made to look real, body movements or gestures are generally not changed. Thus, deepfaked videos may contain mismatched shrugs, head nods, or hand gestures used to emphasize a certain point.

If someone is looking away while speaking, for example, that might suggest that the video was created with AI. Face distortions, no blinking or unnatural blinking, and cropped effects around the mouth, eyes, and neck are other good places to look if you’re wondering if a video could be AI generated.

3. Inexpressive voices and unusual breathing patterns

Listen carefully to the voice - it's not quite as natural sounding as my real one.

Because these deepfakes are often made with AI generated voices, listen to the audio carefully for clues on whether a video is deepfaked or not. Cloned voices tend to sound more monotone and less expressive than a real human voice. Listen carefully to the breathing patterns and inflections of the voice.

Cloned voices may also lack background noises, like a car driving by or rain on a window. The absence of these sounds can be another clue that the backing audio is AI generated.
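The background noise clue can also be checked roughly with code. The sketch below is an illustrative heuristic, not a proven detector: it estimates the noise floor of a recording by measuring how loud the quietest short frames are. The function name, the 20 ms frame length, and the 10% quantile are assumptions chosen for the example, and the audio is assumed to be a mono array of floating point samples between -1 and 1.

```python
import numpy as np


def noise_floor_db(samples, sample_rate, frame_ms=20.0, quantile=0.1):
    """Estimate the background noise floor in dB relative to full scale.

    The signal is split into short frames, the RMS level of each frame is
    computed, and the level at the given low quantile is returned. Pauses
    in real recordings usually still contain room noise; pauses in
    synthetic audio can be almost perfectly silent.
    """
    x = np.asarray(samples, dtype=np.float64)
    frame_len = max(1, int(sample_rate * frame_ms / 1000.0))
    n_frames = len(x) // frame_len
    if n_frames == 0:
        raise ValueError("audio is shorter than one frame")
    frames = x[: n_frames * frame_len].reshape(n_frames, frame_len)
    rms = np.sqrt((frames ** 2).mean(axis=1))
    floor = float(np.quantile(rms, quantile))
    # Clamp to avoid log10(0) when the quiet frames are digital silence.
    return 20.0 * np.log10(max(floor, 1e-10))


# Synthetic demo: a tone that switches on and off every half second stands
# in for speech with pauses. The "room" version adds faint random noise.
rate = 16000
t = np.arange(rate * 2) / rate
speaking = np.floor(t * 2) % 2 == 0
clean = 0.5 * np.sin(2 * np.pi * 220.0 * t) * speaking
rng = np.random.default_rng(0)
with_room_noise = clean + rng.normal(0.0, 0.01, size=clean.shape)

clean_floor = noise_floor_db(clean, rate)
noisy_floor = noise_floor_db(with_room_noise, rate)
print(f"clean: {clean_floor:.1f} dB, with room noise: {noisy_floor:.1f} dB")
```

An unusually low noise floor is weak evidence on its own, since studio recordings and audio passed through noise removal can look the same.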

Is it illegal to make a deepfake?

No - right now, there’s no law that explicitly bans deepfakes. However, under the right of publicity laws of many US states, it’s illegal to use someone’s likeness commercially without their permission. There are major open questions about what is permissible or not.

Many of the examples of videos that have gone viral are satirical and shared for entertainment purposes. In the Copyright Act of 1976, Congress wrote the principles of fair use into law, stating that criticism, news reporting, teaching, and scholarship should not constitute an “infringement of copyright.”

Deepfakes may also constitute defamation if used to harm someone’s reputation, as in the case of a school principal who was fired after a disgruntled coworker made a fake audio recording of him making racist remarks. However, U.S. defamation law distinguishes between public figures and private persons, and a deepfake of Elon Musk, for example, may be considered permissible even if it harms his reputation.

The law has not caught up with the ease of creating highly realistic deepfakes. Most state laws that prohibit unauthorized synthetic media focus on non-consensual pornography, and few states have laws concerning generative AI specifically. At the same time, people have been altering videos for decades, and applicable legal frameworks may include defamation, false light, trade libel, infliction of emotional distress, and the right of publicity.

This excellent report by the Co-Creation Studio at MIT covers these issues and more. As the technology improves, it may be prudent to establish what exactly is permitted or not under the law.

What now?

It’s clear that creating video deepfakes has already gone mainstream. The popularity of these videos amongst creators, as well as the ease with which they can be created, is only continuing to grow. Like any technological development, however, they can be wielded positively or nefariously. Our team’s stance is that educating the public about this technology is the key way to combat bad actors. As part of this campaign, we hope readers will try out the technology to see for themselves how easy it can be to use, as well as how high quality the outputs can truly be.

These tools need to be made available in a safe way. On Kapwing, educational personas contain a non-removable watermark. We hope that other providers of a similar service can also make it clear when content is altered, and take steps to ensure safety by making it difficult for bad actors to abuse the tech.

Watermarked generation that shows what's possible with today's tools.

In addition to the tools, platforms like YouTube, Instagram, and X have an important responsibility to add checks and labels for AI generated content before it can do damage. For example, stopping fake news or financial scams early on, before the posts go viral, will be an important problem to solve. Having expert review for questionable videos could be another strategy for moderation. Finally, educating the public on what’s possible and how to detect fakes themselves is another important piece of the puzzle.

While the word “deepfake” might carry some negative connotations, it’s also important to remember that there are many legitimate use cases for the technology that powers them. On Kapwing, for example, hundreds of creators have used AI Personas to scale up their own content production for things like social media, training videos, marketing videos, educational courses, and much more. The technology itself is not inherently bad or dangerous - the issue, like most things in AI, is what humans choose to do with it. We hope that we can play a small role in sharing its pros and cons, and educating readers on what’s possible.