Our AI Art Generator Isn't Being Used To Generate Art

When the VQGAN+CLIP image and video generation techniques started trending on Twitter earlier this month, I was excited to try them out for myself. I've always been interested in the intersection of technology and art, and this felt like the perfect opportunity to create an easy way to share that passion with others. Since I work at Kapwing, an online video editor, building an AI art and video generator seemed like a project right up our alley. The problem? When we made it possible for anyone to generate art with artificial intelligence, barely anyone used it to make actual art. Instead, our AI model was kept busy making videos for random inputs, trolling queries, and NSFW requests.

If you're not familiar with VQGAN+CLIP, it's a recent AI technique that makes it possible to generate digital images from a text prompt. The CLIP model was released in January 2021 by OpenAI, and it opened the door for a large community of engineers and researchers to create abstract art from text prompts.

We knew that many people wouldn't know how to access these models, so we hacked together a hosted version that didn't require coding knowledge, access to GPUs, or lengthy installations. The original intent of the AI video generator was simply to give anyone an easy way to try out AI art generation, rather than leaving it to a select group of engineers and researchers. We put it online and launched it on Hacker News, Reddit, and Product Hunt, where it drew thousands of visits in aggregate.

The generator takes any text prompt as input. For example, you could ask the model to make an image of "sunrise on the ocean". A new random seed was used each time, so every generated work was unique. We were excited to see what people would type to try out generating art, and based on what we'd seen on Twitter, we expected mostly art-focused or poetic inputs. We did get some great ones, and we were initially excited by inputs like "surreal ideas amidst a maelstrom of color in the style of art nouveau", "the cat is sleeping under the promethean cloud", and "astronaut floating in pink outerspace".

Unfortunately, the vast majority of queries had our model spending its time on random, trolling-type inputs or inputs with NSFW intent. These queries would connect unrelated concepts in oddly specific ways, such as "rabbit eating human", "mike tyson as a clown on a building", and "moms spaghetti".

These random inputs don't reflect an art-making intent, but hey, it's the internet, right? The problem is that the public narrative suggests art generation is the main use of these models, while the reality we saw was very different. The use of generative models for things other than abstract art is not talked about enough.

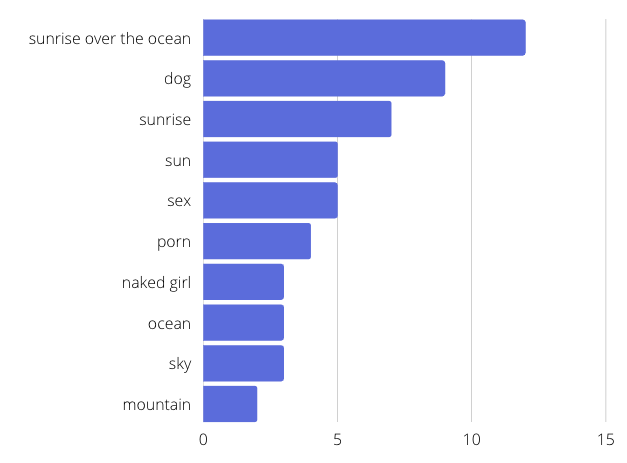

In addition to these random inputs, a large number of inputs expressed NSFW intent, such as "naked woman", "perverts of every shape and size moved ghoulishly along as i wandered throu", "thong bikini covered in chocolate", "gay unicorn at a funeral", and many more that are too explicit to include in this article. In an informal classification of 200 queries, we found that only around 5% were art-related, while about 15% had NSFW intent. Seeing such a high volume of highly specific NSFW queries was eye-opening and disappointing to us. We had hoped the generator would be a source of original artwork and NFTs; instead, it felt like it was being abused by the internet. Out of the thousands of unique inputs, many of the top inputs (outside of the defaults) were NSFW-related:

What's even more surprising is that VQGAN+CLIP was never designed to generate truly explicit content, which raises the question of what these users intended. Is it that the internet craves NSFW content so much that people will type it anywhere? Or do people have a propensity to try to abuse AI systems? Either way, the results must have been disappointing to these users, as most of what our model produced was abstract.

For example, here is what the system outputted for "naked woman":

The image is somewhat suggestive, but I imagine the person who typed the prompt was disappointed. Outputs for other NSFW queries were similar: representative of the subject, but more abstractly disturbing than explicit. More than anything, however, I have to question why our generator was being used to produce this kind of content at all. Most of our top-of-funnel traffic came from tech-focused outlets such as Hacker News and Product Hunt, where most written feedback was enthusiastic about using the generator for art and NFTs.

This experience led us to consider shutting down the generator just a few days after launch, as it no longer served its original mission: making AI art generation more accessible. Instead, the generator and our GPU resources were being used to make AI-generated adult content and fulfill trolling requests.

In writing this article, I hope to raise awareness of the potential for AI to be abused. If one of our well-intentioned tools can be misused so quickly, I worry that AI developments from OpenAI or other top researchers could be misused in similar ways, or for worse reasons.

While the data from our launch is not a formal study, it still raises questions about what AI could potentially be used for. When the narrative around VQGAN+CLIP focuses too narrowly on beautiful, art-related use cases, it ignores the reality that a model like VQGAN+CLIP is open to abuse and can be repurposed for things its original creators never intended.

Thanks for reading! Feel free to message me on Twitter with your thoughts. One plug if you made it this far: if you're interested in working on creative software for video creators, we at Kapwing are hiring. Our product makes video editing easy, modern, and collaborative.