Why Can't AI Draw Hands (and Other Human Features)?

AI-generated art is infamous for getting certain human features wrong. In this article, we dive into why AI still struggles with these features.

Generative artificial intelligence has grown by leaps and bounds in the last two years. What was once a niche and highly technical application has become incredibly accessible. Now anyone can log onto ChatGPT or Midjourney and create code, content, or even art.

Generating AI art has been a particularly fascinating aspect of this technology. In just seconds, AI art generators like Midjourney can turn a prompt into beautiful and even realistic art. At least, some of the time.

For all the amazing capabilities of AI art generators, there’s still a major area of struggle: creating realistic people.

AI art generators just can’t seem to produce realistic renderings of human hands, teeth, and eyes, for reasons we’ll get into below. The results can be funny, but they can also be downright creepy.

Take a look at these images generated by Midjourney when prompted to render a group of five people laughing at a party in a realistic style.

Midjourney delivered what was asked, yet there’s something unsettling about the image. Some of the hands aren’t quite right, the smiles are too toothy, and the eyes just seem dead.

Let’s take a closer look at why AI image generators still have trouble with particular human features, why we find it so off-putting, and how you can address these issues in your own work with AI.

The most common human features that AI gets wrong

Of all the human features that AI image generators don’t get quite right, there are three that stand out the most. We’ll break down each one and examine why they’re so difficult for AI to master.

AI hands

Of all the things AI-generated art gets wrong, human hands are the most notorious. In fact, one of the easiest ways to tell if an image of people was generated by AI is to examine the hands: Are there too many fingers? Are the fingers at strange angles? Are the hands oddly small or large?

In this example, I asked Midjourney for a portrait image of a woman holding an umbrella. Here are two of the results:

The first image is mostly perfect, but you’ll notice that the woman’s hand has six fingers. In the second image, the AI tried to create two hands with pretty upsetting results. The fingers are all over the place and at odd angles, even though the rest of the image, like the face and dress, has amazing detail.

Sometimes these mistakes are a little more subtle. Below, I asked Midjourney for an image of a man holding a wand.

The total number of fingers is correct — if you ignore the fact that it rendered the thumb as a second index finger. All five digits are weirdly long and sausage-like and the grip is all wrong. The palm and wrist are also too small and at a strange angle.

As you can see, Midjourney appears to have difficulty rendering hands performing an action, such as grasping an object. But even asking for images of plain hands leads to bizarre results.

Here, I asked for close-up images of hands wearing pink nail polish, and the results speak for themselves:

Too many fingers, wrists and knuckles bending at impossible angles, fingers merging into each other — all rendered in photorealistic quality, down to the last wrinkle and veins visible through the delicate skin on the backs of the hands.

Why AI art generators can’t get hands right

The reasons that AI art generators can’t seem to make hands look normal are actually a fascinating example of the limitations of AI.

The first big reason is that AI art generators simply aren’t humans. They’re trained on a data set of thousands or millions of images, but they don’t necessarily have an understanding of what a hand actually is. The AI doesn’t know that hands are supposed to have four fingers and a thumb, or the general proportions of fingers and palms.

It’s the same reason why if you ask Midjourney for an image of an octopus, you might get a hyperrealistic image with incredible detail and dimension — but not eight tentacles.

It also can’t understand the subtleties of how hands move and bend. A human artist can look at their own hand, or the hand of the model, from any angle and position, taking the time to understand how the shapes change. AI just knows what it learned from static images in its data set, which it then combines to create a new image.

That brings up the next issue. These generators have not taken in enough information about hands to get them right. Not only do their data sets contain relatively few images of hands, but the hands that do appear are at all kinds of angles, making it harder for the AI to understand different positions with any depth.

If you were to train an AI art generator exclusively on millions of images of hands holding umbrellas, you might get better results, but that’s not what current generators are working with.

Lastly, there’s an element of human bias. When an AI-generated image of a hand is off, we notice right away because we've spent our whole lives observing our own hands and the hands of other people. We know what they look like, how they move, and how they don't.

By contrast, when AI takes liberties with, for example, the texture of a shirt or the number of points on a maple leaf, we’re less likely to notice.

AI teeth

Another very human aspect AI art generators struggle with is teeth. In that first image above of the people at a party, you can see the teeth look creepy. There are too many teeth in each mouth, and they’re too bright and prominent.

Here, we see it again when I asked for a close-up of a woman smiling with teeth. The results are a bit better, but if you look closely there are still too many teeth, the shapes aren’t quite right, and they're not rendered with the same dimension and perspective as other features, so they look cartoonish and flat.

Why AI art generators have difficulty with teeth

The reasons why AI art generators mess up when it comes to teeth are similar to why they can’t get hands right.

The AI is able to figure out that teeth are white, shiny, and come in rows, but it doesn't know exactly how many teeth the average person has. It has an easier time with toothy smiles because data sets tend to have many photos of smiling people, but if you get closer you’ll probably see issues.

AI eyes

The issues with AI generating human eyes can be far subtler than too many fingers, but still unnerving.

Sometimes, the eyes will look good at a glance, but on closer inspection, the pupils are offset, the eyes are slightly crossed, or there's drooping or smearing at the corners. In this example, there's something wrong with the left eye in each image:

Notice that the mistakes persist across a spectrum of styles from photorealism to painterly. Because AI-generated images are the AI's interpretation of a written prompt and the visual input it's been trained on, the AI can incorrectly interpolate eye size, gaze direction, visual perspective, etc.

Sometimes, however, there's nothing overtly wrong with AI-generated eyes but they still look "off."

In the example below, we can see subtle imperfections that nevertheless set off alarm bells. In one image, the shadows are off, making the eyes look flat. In another, the eyes appear too orblike and glassy, more like a doll's than a human's.

The large close-up of the blue eye is incredibly detailed, down to the crumbles of mascara on the individual eyelashes. But there's no pupil. That's easy to miss at a glance, since the inside of the eye is solid and dark, but on closer inspection, there's no clear delineation between iris and pupil, which gives the eye an unsettling, voidlike appearance.

As pieces of art, these faces and eyes are certainly beautiful — but they don’t feel real. And the more photorealistic the rest of the piece, the more obvious dead, AI-generated eyes can appear.

Why AI eyes are so strange

The main reason why you can tell these eyes aren’t real comes down to the human brain's incredible capacity for pattern recognition. In our interactions with other people, we see real human eyes in every expression, angle, lighting, and variation.

Our brains have a lifetime of data to immediately recognize an emotion expressed in just the eyes. While we don't consciously recognize these patterns, we know when what we're seeing doesn't align with our existing data. If even something small is off, we can tell.

AI just isn't capable (yet) of consistently replicating the subtleties in human eyes in a way that makes them realistic.

Why AI images are so unsettling

We’ve talked about how humans are highly sensitive to when prominent features like hands, teeth, and eyes don’t feel quite right. But why does it make us feel so uneasy?

One hypothesis that explains this phenomenon is called the “uncanny valley.” It’s the idea that when we recognize that something human-like isn’t in fact human, it sets off alarm bells in our minds.

You may have experienced this looking at human-like robots, realistic dolls, or even apes and monkeys with features that resemble humans.

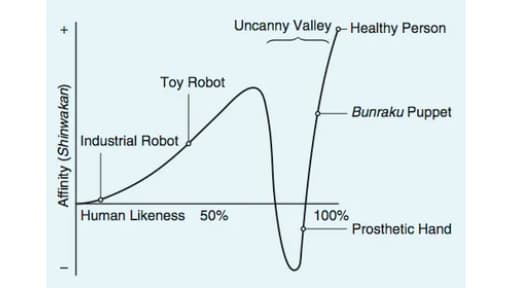

The concept of the uncanny valley was first introduced in 1970 by Japanese robotics professor Masahiro Mori.

This theory proposes that there is a proportional relationship between something's human-like appearance and our affinity for it (aka, how comfortable it makes us feel) – up to a certain point. Once an object, image, or creature reaches a specific threshold of human likeness, our affinity takes a nosedive.

The “valley” that this steep drop-off creates represents the cognitive dip of seeing something that approaches human likeness but that we recognize as distinctly inhuman. If you feel unsettled or even repulsed by a humanoid image or object, you’re in the valley.

You know it when you see it.
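To make the shape of that curve concrete, here is a small toy sketch in Python. It is only an illustration: the function name, the 0-to-1 likeness scale, and every number in it (where the dip sits, how wide and deep it is) are assumptions chosen to produce a valley shape, not measurements from Mori or anyone else.

```python
import math


def affinity(likeness: float, dip_center: float = 0.85,
             dip_width: float = 0.05, dip_depth: float = 1.2) -> float:
    """Toy affinity score for a given human likeness between 0 and 1.

    Affinity rises in step with likeness, except for a sharp dip
    (the "valley") centered on dip_center. All parameters are made up
    for illustration.
    """
    if not 0.0 <= likeness <= 1.0:
        raise ValueError("likeness must be between 0 and 1")
    rising_trend = likeness  # more human-like means more affinity
    # Bell-shaped dip that only matters close to dip_center
    dip = dip_depth * math.exp(
        -((likeness - dip_center) ** 2) / (2 * dip_width ** 2)
    )
    return rising_trend - dip


# Print a few points along the curve to see the rise, the dip, and the recovery
for likeness in (0.2, 0.5, 0.7, 0.85, 1.0):
    print(f"likeness={likeness:.2f}  affinity={affinity(likeness):+.2f}")
```

In this sketch, affinity climbs steadily, drops below zero near the assumed dip center, and recovers as likeness approaches a real human. That is the general shape the theory describes, nothing more.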

Interestingly, movement significantly steepens the slopes of the uncanny valley.

The Sophia robot by Hanson Robotics elicited far more of that quintessential uneasiness associated with the valley than any DALL-E or Midjourney images could.

One possible explanation is that we are evolutionarily primed to prefer fellow humans that have markers of good health. When something’s off in the eyes, face or small muscular movements, we’re predisposed to recognize that as something unsafe. Another theory is that these human-like images and objects force us to confront our fear of death.

This is all theoretical, but it’s a fascinating explanation for why AI art of humans can make us feel strange when we look at it. Having too many fingers or teeth, or expressionless eyes, can heighten that feeling.

How can we avoid creepy AI-generated images?

If we know that AI struggles with particular human features, what can we do about it?

Well, first, the good news is that AI models are improving all the time. As applications like Midjourney and DALL-E add more images to their data sets, they can further refine their outputs. Since the programmers of these applications know that hands, teeth, and eyes are difficult, there’s motivation to focus on feeding more images of these features into data sets. Basically, you’ll just have to wait.

Being as specific as possible with your prompts when using AI image generators can also help deliver better results. Sometimes, though, you’re just inevitably going to get something weird.
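As a rough illustration of what "specific" can mean, the sketch below assembles a prompt from a subject plus a list of extra details. The helper name build_prompt and the example details are hypothetical, and this is plain string handling in Python rather than any generator's actual API. Whether a given detail improves the output will vary by tool and version.

```python
def build_prompt(subject: str, details: list[str]) -> str:
    """Join a subject and optional descriptive details into one prompt string."""
    # Skip empty details so the prompt has no stray commas
    parts = [subject.strip()] + [d.strip() for d in details if d.strip()]
    return ", ".join(parts)


# A vague prompt leaves the hands entirely up to the generator
vague = build_prompt("a woman holding an umbrella", [])

# A more specific prompt spells out what should be visible (example wording only)
specific = build_prompt(
    "a portrait of a woman holding an umbrella",
    ["one hand visible", "five fingers wrapped around the handle", "realistic style"],
)

print(vague)
print(specific)
```

The resulting text is what you would paste into the generator's prompt box. Even a detailed prompt like the second one can still produce something odd.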

The best approach in this case is to return to human intervention and use photo editing software to correct any minor mistakes. That could mean moving around some teeth, or editing out an extra finger. Until AI catches up, human artists will still need to intervene.

Come for the art, stay for the uncanny valley

Although it seems like generative AI is everywhere, it's only become widely accessible relatively recently. The average person can now access AI tools for everything from generating videos to editing videos to creating stunning art. You’ve probably even casually used generative AI on social media.

These applications, especially art generators, are still in their infancy and programmers work every day to improve them. As AI gets better at learning and expands its data sets, there will be some hiccups along the way. Hands, teeth, and eyes are just a few examples of that, as are AI hallucinations.

There may well come a time when AI art generators can make perfect hands with the right number of fingers, but in the meantime enjoy the ride.

AI art FAQs

Why can’t AI draw hands?

Hands are hard for AI art generators because these tools are only as good as their data sets. Because hands are so complex, and because AI doesn’t actually understand how many fingers a hand should have, it makes mistakes. As these tools improve and study more images, it's expected that they’ll only get better at drawing hands.

Why does AI-generated art look weird?

AI art generators are still imperfect and are only as good as the data they’ve collected. If AI art makes you feel weird or uneasy, it may be because it falls into the “uncanny valley,” a theory that when something is human-like, but not human-like enough, our instinct is to reject it.

Why do AI images have weird human features?

AI art generators are still learning how to properly render human features like hands, teeth, and eyes. That’s because these tools are making images based on scanning millions of other images — they don’t actually understand the subtle shapes and movements that define human features.

What are AI-generated images based on?

AI image generators are fed millions of other images, which they learn from. For example, if you ask for a picture of a smiling woman, the output is based on every image the AI has identified as a woman smiling.
